by Li Yang Ku (Gooly)

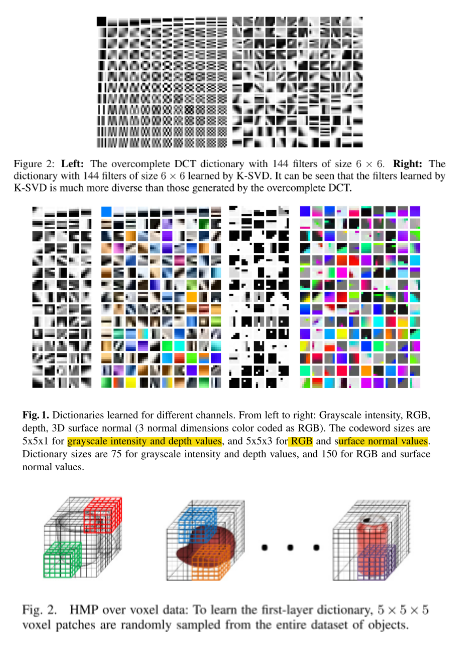

I’ve been reading some of Dieter Fox’s publications recently, and a series of work on Hierarchical Matching Pursuit (HMP) caught my eye. Three papers are based on HMP: “Hierarchical Matching Pursuit for Image Classification: Architecture and Fast Algorithms”, “Unsupervised feature learning for RGB-D based object recognition” and “Unsupervised Feature Learning for 3D Scene Labeling”. The HMP algorithm is at the core of all three. The first paper, published in 2011, deals with scene classification and object recognition on grayscale images; the second, published in 2012, takes RGB-D images as input for object recognition; and the third, published in 2014, further extends the application to scene recognition using point cloud input. The three figures below show the feature dictionaries used in these papers in chronological order.

One of the central concepts of HMP is to learn low-level and mid-level features instead of using hand-crafted features such as SIFT. In fact, the first paper claims to be the first work to show that learning features from the pixel level significantly outperforms approaches built on top of SIFT. To explain it in one sentence, HMP is an algorithm that builds up a sparse dictionary and encodes the input with it hierarchically, such that meaningful features are preserved. The final classifier is simply a linear support vector machine, so the magic is mostly in the sparse coding. To fully understand why sparse coding might be a good idea, we have to go back in time.
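
To make the "sparse dictionary plus hierarchical encoding" idea a bit more concrete, here is a minimal sketch of one layer, assuming NumPy. The encoder is plain orthogonal matching pursuit, and the pooling step takes the maximum absolute code over a group of patches. The function names `omp` and `max_pool`, the random dictionary and the sparsity level are all my own illustrative assumptions, not the authors' implementation (the papers also learn the dictionary rather than drawing it at random).

```python
import numpy as np

def omp(D, x, k):
    """Orthogonal matching pursuit: approximate x with at most k atoms (columns) of D."""
    residual = x.astype(float).copy()
    support = []            # indices of the atoms selected so far
    coef = np.zeros(0)
    for _ in range(k):
        if np.linalg.norm(residual) < 1e-12:
            break           # x is already reconstructed exactly
        # Pick the atom most correlated with what is still unexplained.
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j in support:
            break           # no new atom helps any further
        support.append(j)
        # Refit the coefficients of all selected atoms by least squares.
        coef, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        residual = x - D[:, support] @ coef
    code = np.zeros(D.shape[1])
    code[support] = coef
    return code

def max_pool(codes):
    """Pool a (num_patches, num_atoms) array of sparse codes into one feature vector."""
    return np.max(np.abs(codes), axis=0)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    D = rng.normal(size=(25, 64))        # hypothetical dictionary for 5x5 patches
    D /= np.linalg.norm(D, axis=0)       # unit-norm atoms
    patches = rng.normal(size=(16, 25))  # stand-in for 16 image patches
    codes = np.array([omp(D, p, k=4) for p in patches])
    feature = max_pool(codes)            # would feed the next layer or a linear SVM
    print(codes.shape, feature.shape)
```

Stacking a second encoder on top of the pooled features gives the hierarchy; the sketch stops at one layer to stay short.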

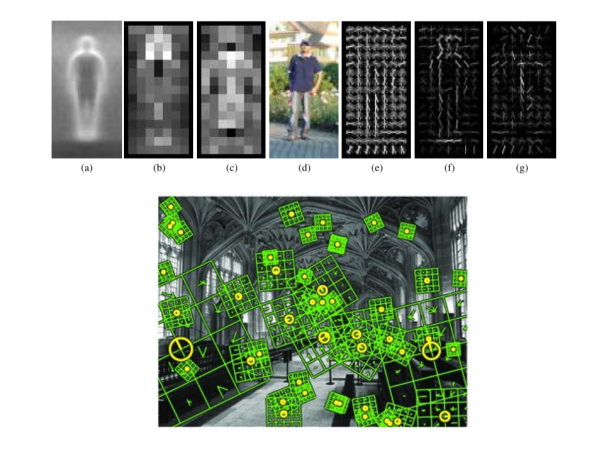

Back in the 50’s, Hubel and Wiesel’s work on discovering Gabor-filter-like neurons in the cat brain really inspired a lot of people. However, the community thought the Gabor-like filters were some sort of edge detector. This discovery led to a series of work on edge detection in the 80’s, when digital image processing became possible on computers. Detectors such as Canny, Harris, Sobel and Prewitt are all based on the concept of detecting edges (or, in Harris’s case, corners) before recognizing objects. More recent algorithms such as Histogram of Oriented Gradients (HOG) are an extension of these edge detectors. An example of HOG is the quite successful paper on pedestrian detection, “Histograms of oriented gradients for human detection” (see figure below).

If we move on to the 90’s and 2000’s, SIFT-like features seem to have dominated a large part of the computer vision world. These hand-crafted features work surprisingly well and have led to many real applications. This type of algorithm usually consists of two steps: 1) detect interesting feature points (yellow circles in the figure above), and 2) generate an invariant descriptor around each of them (green checkerboards in the figure above). One of the reasons it works well is that SIFT only cares about interest points, which lowers the dimension of the feature significantly. This allows classifiers to require fewer training samples before they can make reasonable predictions. However, throwing away all that geometry and texture information is unlikely to be how we humans see the world, and it will fail in texture-less scenarios.

In 1996, Olshausen showed that by adding a sparsity constraint, Gabor-like filters emerge as the codes that best describe natural images. What this might suggest is that the filters in V1 (Gabor filters) are not just edge detectors, but statistically the best coding for natural images under the sparsity constraint. I regard this as the most important evidence that our brain uses sparse coding, and as the reason that many new algorithms that use sparse coding, such as HMP, work better. If you are interested in why evolution picked sparse coding, Jeff Hawkins has a great explanation in one of his talks (at 17:33); besides saving energy, it also helps with generalization and makes comparing features easy. Andrew Ng also co-authored a paper, “The importance of encoding versus training with sparse coding and vector quantization”, analyzing which part of sparse coding leads to better results.
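
For readers who want to see what "adding a sparsity constraint" means in practice, here is a rough sketch, assuming NumPy. It writes the cost as reconstruction error plus an L1 penalty on the code and minimizes it over the code with iterative soft-thresholding (ISTA). The L1 penalty, the fixed dictionary `D`, the weight `lam` and the function names are assumptions for illustration; the 1996 paper also learns the dictionary and is not necessarily using this exact cost.

```python
import numpy as np

def sparse_coding_cost(D, x, a, lam):
    """Reconstruction error plus an L1 sparsity penalty on the code a."""
    return 0.5 * np.sum((x - D @ a) ** 2) + lam * np.sum(np.abs(a))

def ista(D, x, lam, n_iter=200):
    """Minimize the cost above over a, for a fixed dictionary D, with ISTA."""
    step = 1.0 / np.linalg.norm(D, 2) ** 2   # 1 / Lipschitz constant of the gradient
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        # Gradient step on the reconstruction term.
        z = a - step * (D.T @ (D @ a - x))
        # Soft-thresholding: shrinks every coefficient and sets small ones to zero.
        a = np.sign(z) * np.maximum(np.abs(z) - lam * step, 0.0)
    return a
```

The soft-thresholding step is what produces exact zeros in the code: a larger `lam` gives a sparser code at the price of a worse reconstruction.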
