Category: brain

-

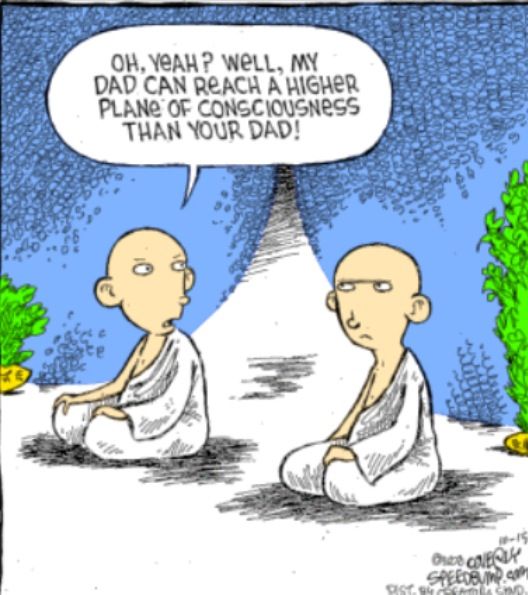

Consciousness and Intelligence

(By Li Yang Ku) In the past I’ve always avoided commenting on consciousness. My view was that because consciousness is internal to ourselves, it is extremely difficult, if not impossible, to evaluate scientifically. Also, why talk about consciousness when we don’t even understand intelligence? However, some recent readings have changed my view…

-

Why the Idea That Our Brain has an Area Dedicated Just for Faces is Likely Wrong

by Li Yang Ku (Gooly) I was reading a neuroscience textbook recently and came across a paragraph about the discovery of the Fusiform Face Area (FFA) in the human brain. It was not news to me that there is a face area in the brain that activates when a face is observed, but I was…

-

Training a Rap Machine

by Li Yang Ku (Gooly) (link to the rap machine if you prefer to try it out first) In my previous post, I gave a short tutorial on how to use the Google AI platform for small garage projects. In this post, I am going to follow up and talk about how I built (or…

-

Looking Into Neuron Activities: Light Controlled Mice and Crystal Skulls

by Li Yang Ku (Gooly) It might feel like there hasn’t been much progress in brain theories recently; we still know very little about how signals are processed in our brain. However, scientists have moved away from sticking electrical probes into cat brains and have become quite creative in monitoring brain activity. Optogenetics techniques, which were first tested in early…