by Gooly (Li Yang Ku)

In one of my previous posts I talked about the big picture of object recognition, which can be divided into two parts: 1) transforming the image space and 2) classifying and grouping. In this post I am going to talk about a paper that clarifies object recognition, along with some of its pretty cool graphs explaining how our brains might transform the input image space. The paper also discusses why the idealized classification might not be what we want.

Let's start by explaining what a manifold is.

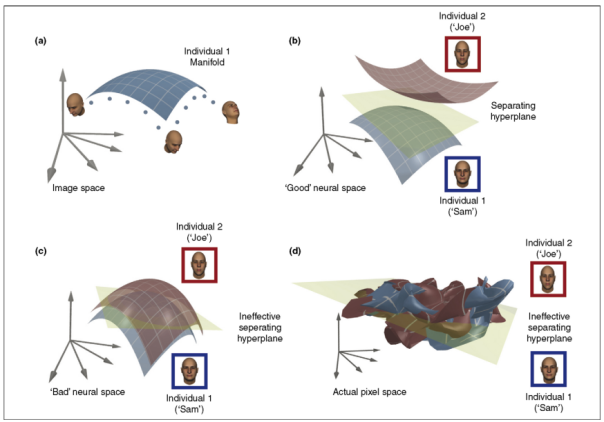

An object manifold is the set of images projected by one object in the image space. Since each image is a point in the image space and an object can project similar images with infinitely small differences, the points form a continuous surface in the image space. This continuous surface is the object's manifold. Figure (a) above is an idealized manifold generated by a specific face. When the face is viewed from different angles, the projected point moves around on the continuous manifold. Although the graph is drawn in 3D, keep in mind that the real space has far more dimensions: 576,000,000 of them if we consider the human eye to be 576 megapixels.

Figure (b) adds a second manifold from another face; in this space the two individuals can be separated easily by a plane. Figure (c) shows another space in which the two faces would be hard to separate. Note that these are idealized spaces that our cortex might produce by transforming the original image space. If the shapes were that simple, object recognition would be easy. However, what we actually get is Figure (d): the manifolds of two objects are usually tangled and intersect in multiple spots. Still, the two manifolds are not identical, so it is possible that some nonlinear operation can transform figure (d) into something more separable, like figure (b).
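To make the "nonlinear operation" idea concrete, here is a minimal toy sketch, not anything taken from the paper. It assumes two made-up "object manifolds" that are just concentric circles in a 2D space (a stand-in for tangled manifolds, even though they do not literally intersect), and a hypothetical `untangle` transform that adds the squared distance from the origin as an extra axis. The function names are my own inventions for illustration.

```python
import math

def make_manifold(radius, n_points=200):
    """Toy 'object manifold': points on a circle, one point per viewing angle."""
    return [(radius * math.cos(2 * math.pi * k / n_points),
             radius * math.sin(2 * math.pi * k / n_points))
            for k in range(n_points)]

def project(points, w):
    """Project every point onto the direction w (a dot product per point)."""
    return [sum(x * wi for x, wi in zip(p, w)) for p in points]

def separable_along(a, b, w):
    """True if some plane perpendicular to w puts a and b on opposite sides."""
    pa, pb = project(a, w), project(b, w)
    return max(pa) < min(pb) or max(pb) < min(pa)

def untangle(points):
    """Hypothetical nonlinear transform: append squared radius as a new axis."""
    return [p + (sum(x * x for x in p),) for p in points]

face_a = make_manifold(1.0)  # assumed manifold of one face
face_b = make_manifold(2.0)  # assumed manifold of another face

# Try 180 directions in the original 2D space: none of them separates the two.
directions = [(math.cos(math.pi * k / 180), math.sin(math.pi * k / 180))
              for k in range(180)]
separable_2d = any(separable_along(face_a, face_b, w) for w in directions)

# After the nonlinear transform, a plane perpendicular to the new axis works.
separable_3d = separable_along(untangle(face_a), untangle(face_b),
                               (0.0, 0.0, 1.0))

print(separable_2d, separable_3d)  # prints: False True
```

The transform here is hand-picked because we already know the shape of the toy manifolds. The interesting question for the brain (or a vision system) is how such a transform could be found for real image manifolds.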

One interesting point this paper makes is that the traditional view that a single neuron represents an object is probably wrong. Instead of having a grandmother cell (yes... that's what they call it) that represents your grandma, our brain might actually represent her with a manifold. Neurologically speaking, a manifold could be a set of neurons that have a certain firing pattern. This is related to the sparse encoding I talked about before and is consistent with Jeff Hawkins' brain theory. (See his talk about sparse distributed representations, around 17:15.)
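As a rough illustration of the difference between a single-cell code and a firing pattern spread over several neurons, here is a small sketch under my own assumptions: the neuron indices and the `recognize` helper are made up, and matching by overlap is just one simple way to read out a sparse pattern, not the paper's or Hawkins' actual model. It shows one commonly cited property of distributed codes, which is that they still work when a few neurons drop out.

```python
def recognize(active, codebook):
    """Return the label whose stored pattern overlaps most with the active
    neurons, or None if no stored pattern shares any active neuron."""
    best = max(codebook, key=lambda label: len(active & codebook[label]))
    return best if active & codebook[best] else None

# Hypothetical sparse distributed codes: indices of the neurons that fire.
distributed = {
    "grandma": frozenset({3, 17, 42, 58, 91}),
    "grandpa": frozenset({5, 17, 33, 64, 80}),
}

# Hypothetical grandmother-cell codes: exactly one neuron per person.
single_cell = {
    "grandma": frozenset({7}),
    "grandpa": frozenset({8}),
}

# Silence two of grandma's neurons in the distributed code.
damaged_pattern = distributed["grandma"] - {3, 42}
print(recognize(damaged_pattern, distributed))  # prints: grandma

# Silence the one grandmother cell: nothing is left to recognize.
damaged_cell = single_cell["grandma"] - {7}
print(recognize(damaged_cell, single_cell))  # prints: None
```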

Figures (b) and (c) above compare a manifold representation with a single-cell representation. The point being emphasized is that object recognition is more a task of transforming the space than of classification.
